It might seem strange for me to be using artificial intelligence (AI) after ranting about it ripping me off, but I’ve used A1 (as Bob Mortimer calls it) tech since 2015’s gimmicky Deep Dream. The issue for me is being expected to pay for a technology that is leeching off this very blog for free. That’s a fucking cheek (welcome to capitalism, kids!), and really they should pay royalties for the images they use, or at least give artists free access. But I see it more like photography: it won’t replace what I do, but it’s a new technology for artists to (ab)use.

Over at Radio Clash, my blog/podcast is going through a redesign, and I’m working on new featured images and evolving the background graphics. I regularly create digital pieces for the covers of my podcasts and mashups, and various graphics for the site. I’m not sure why I mostly don’t post them here; partly it’s cos I still have that ‘artist vs designer’ mindset. The digital pieces seem rather functional to me, more design than art, but recently the line between traditional and digital media has been blurring, especially with AI image generation.

Is there any ‘art’ in artificial intelligence? We’ll find out!

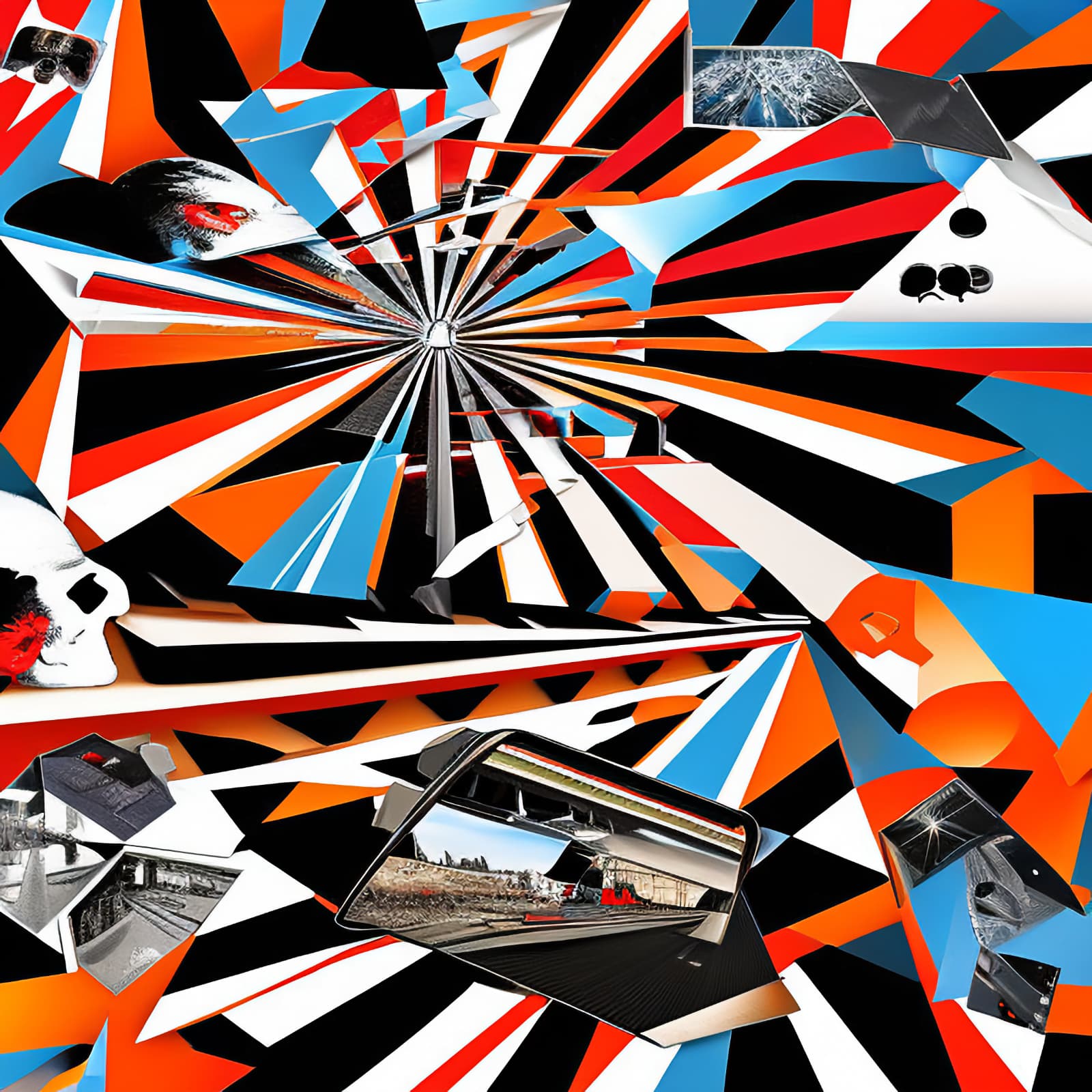

So first, here’s an example of my mashup collage technique, which is what I’m trying to get AI to recreate. I call these Google collages: I used to Google a single term and just use whatever Google Image Search found. Art comes from restriction, but this is legally dodgy, and I’ve had legal threats over images used on the blog in the past. So the old brand logos, film stills, even someone’s home snapshot (!) bother me. Even though I do fight the good fight re: fair use, in reality I’ve found photographers and artists get really arsey about ANY use of their work, even when it falls under legal fair use exceptions like newsworthiness or creative transformation.

You can hopefully see the overlap between this and my larger Abstract work: angles, lines, colour, multiple colour strokes, kitsch/camp imagery, patterns. The abstracts were intended in part to be an offshoot of my digital collages.

Weirdly, I find musicians tend to be less precious (Don Henley excepted), but photographers see their images as some kind of retirement plan and employ copytroll organisations to squeeze non-profit blogs and fan sites for money. Luckily, I’ve found you can just ignore these orgs; they’re just trying it on. If you aren’t monetising the image, have good reason for it to be there and comply with the takedown, there’s little they can do.

The old Soviet postcard and other older graphics are probably out of copyright by now. I created the glitch stuff too, but I let AI ‘remix’ it, using my original as a seed image, to give it firmer legal standing. Since AI imagery is created from scratch, it should be less dodgy (although it’s all a bit of a grey area at the moment who really owns what a trained machine produces). The idea was to have something that mostly doesn’t rely on these dubious images but looks the same, or better.

The second piece is a composite of several attempts, with varying degrees of success, at getting AI to take my first image and create its own version via prompts. This was actually the end of the process; we’ll go into how I got there shortly. There are some pretty way-out versions, and the golden skull and ‘HAL’ (not a still from 2001 but an AI graphical recreation) actually came from pure (i.e. prompt-led) AI generations afterwards. I also had to put some of the original text elements back in, because AI image generation can’t do text: it mangles it.

It’s probably a good idea to explain how AI image generation works, although the actual technology is way beyond a blogpost. AI here really means machine learning. Systems such as OpenAI’s DALL-E 2, Stable Diffusion (as used by PlaygroundAI and NightCafe), Disco Diffusion and Midjourney (I’ve used all of these) are trained on datasets of billions of images, like LAION-5B, which I mentioned had naughtily trawled images from this site (and yes, I’ve asked for them to be removed). Each image is paired with its surrounding text, such as captions and alt text, creating a semantic-visual relationship.

That massive dataset is used to train the machine learning model to ‘learn’ what certain things look like, and the trained model can then create ‘new’ images from text prompts by matching patterns in the language to patterns in the images. It might seem like magic, where everything happens at the push of a button with no work involved. It isn’t. Like all digital creation, it’s easy to make something that’s okay (generic stock art) but hard to put the Ghost In The Machine, the humanity and soul, back in… that requires a LOT of prompt hacking, or ‘prompt engineering’ as they’re grandiosely calling it now.
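To make that ‘semantic-visual relationship’ a bit more concrete: CLIP-style models (Stable Diffusion uses a CLIP text encoder) turn both text and images into lists of numbers, and training pushes a caption and its matching image to point in a similar direction. Below is a toy Python sketch of how ‘related’ gets measured, using cosine similarity. The file names and three-number ‘embeddings’ are made up for illustration; real ones come from a trained network and have hundreds of dimensions. It shows matching only, not the generation step itself.

```python
import math

# Toy, hand-made 3-number "embeddings". Real CLIP-style embeddings have
# hundreds of dimensions and come from a trained network; these values
# and file names are invented purely to illustrate the idea.
IMAGE_EMBEDDINGS = {
    "skull.png": [0.9, 0.1, 0.0],
    "cassette.png": [0.1, 0.9, 0.2],
    "rocket.png": [0.0, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """How closely two vectors point the same way (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_images(text_embedding, images):
    """Return image names sorted from most to least similar to the text."""
    return sorted(
        images,
        key=lambda name: cosine_similarity(text_embedding, images[name]),
        reverse=True,
    )


# Pretend a text encoder produced this for the prompt "old cassette tape".
prompt_embedding = [0.2, 0.8, 0.1]
print(rank_images(prompt_embedding, IMAGE_EMBEDDINGS))
# prints ['cassette.png', 'skull.png', 'rocket.png']
```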

Also, the fact that it’s drawing from billions of badly designed and sometimes bigoted images means you have your work cut out for you. (Fuck ‘trending on ArtStation’: terribly banal, generic fantasy/anime/game designs and imagery. My designs improved 10000% when I banned that shit. I also had to ban ‘girl’, cos otherwise your work gets infected by scantily clad Manic Pixie Dream Girls: magical virtual girlfriends for basement-dwelling neckbeards and teens, I guess?)

So the prompts you give AI are as much about what you don’t want as what you do, and the better systems let you exclude certain things (usually called negative prompts); otherwise it’s a rather bland shitshow of stock art tropes and bad design. DALL-E 2 is bad for this: it’s amazingly quick but tends to be fairly meh. It’s good for stock-art-style images that depict something, or for manual collages. But I wanted to recreate my style by machine, so that’s out. I mostly used Midjourney till I ran out of free images, then found PlaygroundAI, which I highly recommend, especially with the Stable Diffusion 2.1 model.
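Here is roughly what ‘banning’ something does under the hood, at least in Stable Diffusion-based tools (Midjourney’s internals aren’t public, so for it this is an assumption). At each denoising step the model predicts noise once for your prompt and once for the negative prompt, then steers away from the negative and towards the positive. This is classifier-free guidance. A toy Python sketch, where the three-value ‘predictions’ are invented stand-ins rather than output from a real model:

```python
def guided_noise(pos_pred, neg_pred, guidance_scale=7.5):
    """Classifier-free guidance with a negative prompt.

    pos_pred: the model's noise prediction for the prompt you want.
    neg_pred: its prediction for the negative prompt (what you've banned).
    Result = neg + scale * (pos - neg): start from the negative prediction
    and move towards the positive one; with scale > 1 it overshoots past
    the positive, pushing even further away from the banned concept.
    """
    return [n + guidance_scale * (p - n) for p, n in zip(pos_pred, neg_pred)]


# Toy 3-value "noise predictions" (real ones are whole latent images).
pos = [0.5, 0.2, -0.1]  # e.g. "constructivist collage, digital glitch"
neg = [0.4, 0.6, -0.1]  # e.g. "grid, symmetry, text, trending on artstation"
print(guided_noise(pos, neg, guidance_scale=2.0))
```

Where the two predictions agree (the third value), nothing changes; where they disagree, the result is pushed away from the banned side. For reference, the Hugging Face diffusers Stable Diffusion pipeline defaults to a guidance scale of 7.5.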

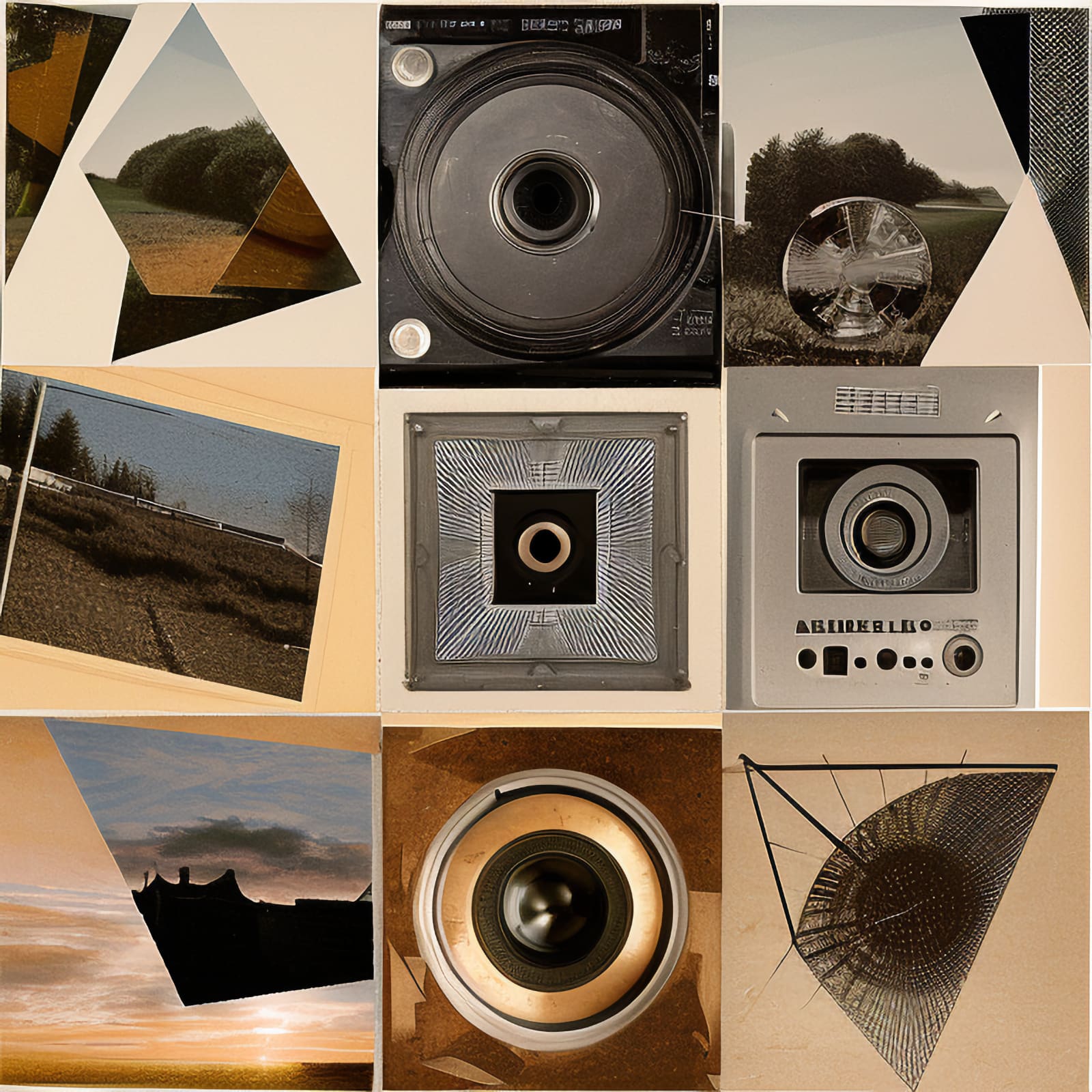

The flags above were fairly random; I’m not sure why it chose to do that, but I was playing around with constructivist angles. The AI I was using likes grids, symmetry and order, whereas, as you can see from my own collage, I rarely use boxes or squares or keep anything at the same angle. I’m a big fan of Dadaist and Constructivist collages, so the angled cut-ups and shapes are a reflection of that. It was quite a struggle to stop the AI reverting to grids and images in boxes and squares (it still does with my prompts), but when I found the ‘constructivist’ term it started doing more Vorticist angular shapes everywhere.

Not sure why flags; I didn’t prompt for them.

Taking it further, I managed to get it to combine photographs that weren’t in boxes, combining and overlaying those images on top of each other. At first it didn’t get that I wanted combinations, or it put in masses of square/rectangular images, so I had to reduce the number (with ‘sparse’) and try to get the composition off-centre, or at least less ordered.

I think for some of these it was still partly following the constructivist colour scheme, but moving terms around in the prompt and emphasising other things seemed to change that. I also struggled with lines: AI sometimes gets obsessed with one thing. If you’ve put it in twice, or early in the prompt, it gloms onto that and does nothing else!

Midjourney seemed to grasp more intuitively what a mashup collage/illustration might look like, but with only 30 or so free images it’s too limited. And again, I’m not paying shit for stolen work… pay me or give it to me for free! Here are two images from Midjourney. The Discord interface is a pain, and I don’t like that either, but it seems to produce better images from the get-go. They’re not great: I had to do some editing on the kids because they looked either rather racistly deformed or just strange, and they still aren’t 100%.

That’s one of the points: it’s rare for an AI image to hit it out of the park without editing afterwards. Glitches occur, as do strange deformities (which prompts can help with) and the gibberish Simlish cod-Latin text it generates. AI does not understand language. I quite often ban text outright, along with logos and typography.

So you can see here how the grids keep popping up, and symmetry. I banned symmetry after the AI got obsessed with it, as well as aligned things. I’m not opposed to layered boxes and squares at angles as long as they’re not in aligned grids, so I asked it to randomly rotate the images. It then got obsessed with triangles!

After a while, by adding ‘digital glitch’ and banning certain things it kept doing, I got it to start getting what I do.

The interesting thing about doing this is that it forces you to think about what you do and what inspires you, and the list is long for these collages. It ranges from Soviet constructivism and space race imagery, cheesy 50s sci-fi, 8-bit images and skull and crossbones (yarr!), archaic music technology such as old hi-fi and cassette tapes, 80s home snapshots, Victorian photography and etchings, retro-futurism and surreal imagery, to just random Google stuff.

The spooky thing is that after I fed my Radio Clash background collage to it at the end, the AI seemed to learn from it. Although the few subsequent images didn’t use that image as a seed (usually AI ‘grows’ the image from a random pattern of noise, but you can use an image instead), they started using bits from the background! Like the skull with headphones, and an AI take on HAL from 2001. So I composited those into the background as well.
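A rough sketch of that seed-versus-noise difference, under simplifying assumptions: text-to-image starts from pure random noise, while image-to-image (the ‘seed image’ route) starts from your image partly drowned in noise, so the result tends to keep its overall layout. The `starting_point` function and its `strength` parameter are hypothetical illustrations. Real tools expose a similar slider (names and direction vary between tools), and real schedulers map it onto a fixed noise schedule rather than the straight line used here.

```python
import math
import random


def starting_point(size, seed_image=None, strength=0.75, rng_seed=42):
    """Hypothetical: where the diffusion process begins.

    Text-to-image: pure Gaussian noise.
    Image-to-image: the seed image blended with noise. strength=1.0 means
    all noise (seed ignored); strength=0.0 means the seed untouched.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    rng = random.Random(rng_seed)  # fixed seed so runs are repeatable
    noise = [rng.gauss(0.0, 1.0) for _ in range(size)]
    if seed_image is None:
        return noise
    # Simplification: treat (1 - strength) as the fraction of signal kept,
    # mixed as sqrt(keep) * image + sqrt(1 - keep) * noise.
    keep = 1.0 - strength
    return [math.sqrt(keep) * x + math.sqrt(1.0 - keep) * n
            for x, n in zip(seed_image, noise)]


my_collage = [0.8, -0.3, 0.5, 0.1]  # a (very) tiny stand-in for a real image
from_noise = starting_point(4)
from_seed = starting_point(4, seed_image=my_collage, strength=0.3)
print(from_noise)
print(from_seed)
```

With a low strength the starting point is mostly your collage, which is why seeded results echo the original; with strength near 1 the seed is all but ignored.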

I think AI offers interesting challenges for artists, some bad, some good. If it can stop being so generic and learn from your own dataset, your own work or voice, I think it will be a useful tool in the future: one where you could sketch or create what you want in rough form, and let it create variants till you have what you want, in your style. But you need to guide it, and create something unique (or have a unique vision) in the first place for it to even fly.

So artists will never be made redundant by this technology. In fact, the exact opposite: with a world of choices at your fingertips, you’ll need someone with very deep visual and historical knowledge, and a vision of how things should be, to guide it. You can see that in the frankly crap ‘AI art’ out there, generically ripping off artists who were never that good in the first place. Garbage In, Garbage Out. I avoid using living artists’ names in prompts (because that shit is evil and wrong), and I usually avoid presets, because otherwise what comes out is the unimaginative dreck you’re seeing everywhere.

Fantasy/anime pseudo-Thomas Kinkade tacky magical fae/D&D bullshit we’ve all seen before. Or ‘cyber’, ‘techno’, ‘Mad Max’ and ‘goth’ variants of such. (And don’t get me started on those horribly glitchy AI animations that look like the a-ha ‘Take On Me’ video but drunk… I used to be an animator, and that shit is fucking awful. Maybe Runway’s new Gen-1, from one of the teams behind Stable Diffusion, will buck the trend of the drunk animator with heavy DTs?)

When Artificial Intelligence stops being so generic, then it will be amazing.

Or, more accurately, when the prompt engineers get some visual education beyond tired anime/Heavy Metal clichés. What I’m seeing out there is a depressing reflection of the utterly dire state of school art education and imagination in 2023… people cloning the same tired old prompts and making Greg Rutkowski very angry indeed. (But what gets me: he’s not actually that good? There are far better artists to be inspired by, like the old masters his generic art relies heavily on: artists who aren’t alive any more and won’t get upset. Why use a third-generation D&D knock-off with a Goya/Velázquez fetish? Lazy.)
